January 7, 2025

Imagine a world where AI agents collaborate seamlessly to solve complex challenges, mirroring human teamwork. Microsoft’s AutoGen, an innovative open-source framework, is turning this vision into reality. By enabling scalable, agentic AI systems, AutoGen is setting a new standard for next-generation AI applications.

First released in 2023, AutoGen lets developers build sophisticated workflows as conversations among multiple agents, each with its own role and model configuration. Let’s dive into what makes AutoGen a game-changer in the AI development ecosystem.

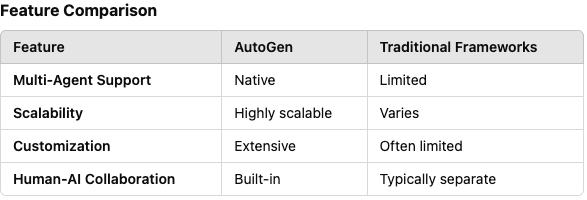

In the rapidly evolving AI landscape, the ability to design resilient, scalable, and distributed agent systems is more critical than ever. AutoGen takes the complexity out of this process, offering an easy-to-use framework that amplifies collaboration among AI agents. Whether it’s orchestrating event-driven applications or solving complex problems autonomously, AutoGen is redefining what’s possible in artificial intelligence.

AutoGen is designed to scale and integrate seamlessly with existing AI ecosystems, supporting:

AutoGen’s plug-and-play compatibility extends to major frameworks, enabling developers to unlock its potential with tools like:

Here’s how to build an interactive conversation between a student and a teacher agent using AutoGen:

```python
import autogen

# Configuration for the LLM
config_list = autogen.config_list_from_json(
    "OAI_CONFIG_LIST",
    filter_dict={"model": ["gpt-4-turbo"]},
)
llm_config = {"config_list": config_list}

# Create a student agent
student_agent = autogen.ConversableAgent(
    name="Student_Agent",
    system_message="You are a curious student eager to learn English grammar.",
    llm_config=llm_config,
)

# Create a teacher agent
teacher_agent = autogen.ConversableAgent(
    name="Teacher_Agent",
    system_message="You are an experienced English teacher. Provide clear, concise explanations.",
    llm_config=llm_config,
)

# Initiate the conversation
chat_result = student_agent.initiate_chat(
    teacher_agent,
    message="Can you explain when to use 'whom' instead of 'who'?",
    summary_method="reflection_with_llm",
    max_turns=3,
)

# Print the conversation summary
print(chat_result.summary)
```
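The `OAI_CONFIG_LIST` name in the example refers to a JSON list of model configurations, which `config_list_from_json` reads from an environment variable or a file of that name; `filter_dict` then narrows the list to the matching models. Here is a minimal sketch of that selection step in plain Python, assuming each entry carries `model` and `api_key` fields. The `select_configs` helper and the sample entries are hypothetical and only illustrate the idea; they are not AutoGen's own implementation.

```python
import json

# Illustrative contents of an OAI_CONFIG_LIST file (placeholder keys, assumed models).
OAI_CONFIG_LIST_JSON = """
[
    {"model": "gpt-4-turbo", "api_key": "your_api_key"},
    {"model": "gpt-3.5-turbo", "api_key": "your_api_key"}
]
"""


def select_configs(configs, filter_dict):
    """Keep entries whose value for every filtered field is in the allowed list.

    Hypothetical helper that mimics the effect of filter_dict for illustration.
    """
    return [
        cfg
        for cfg in configs
        if all(cfg.get(field) in allowed for field, allowed in filter_dict.items())
    ]


configs = json.loads(OAI_CONFIG_LIST_JSON)
selected = select_configs(configs, {"model": ["gpt-4-turbo"]})
print([cfg["model"] for cfg in selected])
```

If no entry matches the filter, the resulting list is empty, so it is worth checking the model name in your configuration first when an agent fails to start.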

While AutoGen’s innovative design unlocks vast potential, it comes with challenges:

AutoGen reflects the broader trend in AI towards autonomous systems and collaborative workflows. As industries increasingly adopt AI, frameworks like AutoGen are poised to lead the charge in creating scalable, intelligent systems.

Here’s how to join the AutoGen revolution:

```shell
pip install pyautogen

export OPENAI_API_KEY="your_api_key"
```
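Note that the student and teacher example loads its settings from `OAI_CONFIG_LIST`, while the command above exports `OPENAI_API_KEY`. One way to bridge the two, sketched here under the assumption that a single OpenAI model is enough, is to build the configuration list directly from the environment variable. The `config_list_from_env` helper is a hypothetical name introduced for illustration, not part of AutoGen.

```python
import os


def config_list_from_env(model="gpt-4-turbo", env_var="OPENAI_API_KEY"):
    """Build a one-entry config list from an API key stored in the environment.

    Hypothetical helper; the default model name mirrors the example above.
    """
    api_key = os.environ.get(env_var)
    if not api_key:
        raise RuntimeError(f"{env_var} is not set; export it before running.")
    return [{"model": model, "api_key": api_key}]


# Usage (requires the variable to be exported first):
#   llm_config = {"config_list": config_list_from_env()}
```

The resulting `llm_config` can be passed to the agents in place of the one built from `OAI_CONFIG_LIST`, and failing early on a missing key gives a clearer error than a rejected API call later.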

AutoGen isn’t just a framework; it’s a paradigm shift in AI development. By combining modular design, multi-agent capabilities, and powerful integrations, it empowers developers to unlock new possibilities in automation, collaboration, and intelligence.

Ready to revolutionize your AI projects? Start building with AutoGen today.